Speaking with the Woman Behind the Facebook Health Data Breach Complaint

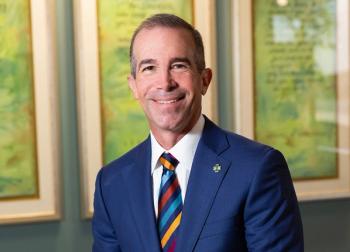

Janae Sharp and community data organizer Andrea Downing discuss the leak’s implications for healthcare and patients.

Government inquiries might ultimately decide data security and privacy rights in technology. In public spaces online, we assume a certain level of privacy, but this assumption has proven flawed.

According to the 2019

I spoke to Andrea Downing, founder of the breast cancer data blog Brave Bosom and one of the activists who worked to reveal a security flaw with Facebook group data. While the FTC inquiry covers a broad spectrum of data security, her work dealt with Facebook groups. Downing showed that, essentially, all of the groups on Facebook, even if they were private or secret, had a back door where sleuths could find membership information and personal data. In groups related to health conditions or political affiliation, this could raise serious privacy and safety concerns.

(Downing and health IT security expert Fred Trotter filed a complaint against Facebook in connection with the data breach. Facebook executives have added new health privacy controls and said they’re

I asked Downing about how she started looking at Facebook privacy concerns and what she did to lead change.

Janae Sharp: What made you even think about looking up whether personal user group data could be accessed on Facebook?

Andrea Downing: I have been involved in Facebook support groups since 2012; I joined the largest group that I co-moderate in 2013 or 2014. I got involved after testing revealed that I have a genetic mutation that puts me at higher risk for breast cancer: the BRCA mutation. In my experience, if you have a rare BRCA mutation and want a sizeable group of people to discuss it with, your only real option is Facebook groups. Everyone ended up going to Facebook.

My first foray into activism as someone with the BRCA mutation was writing about Association for Molecular Pathology v. Myriad Genetics, Inc., a case that came before the Supreme Court before being remanded to the federal courts in 2013. This was an incredibly important case for cancer research. Essentially, its outcome determined that naturally occurring genes are products of nature and cannot be patented simply because they have been isolated. That mattered because it meant human genetic information itself could not be owned. I was a media spokesperson for Breast Cancer Action. I wanted to make sure patient privacy was protected, including keeping information from the human genome from becoming someone’s property.

In the process of following the case, I learned about data sharing and how BRCA fit into the bigger picture of genetic testing and how diagnostic companies make money. In the aftermath of the case, discussions ensued in these various Facebook groups, and the demand for genetic testing increased. People were more aware of the availability of these tests — they started seeing genetic counselors and asking physicians more complex questions. Meanwhile, I was struggling to get support to navigate my own experience, and as I connected with other women through Facebook groups, I realized that my experience trying to connect with an online community was not uncommon.

J.S.: Tell me what made you think about this from a technology perspective. What drove you to explore?

A.D.: I started my career at a company in Silicon Valley called Salesforce. I love tech. I always look at data integration and APIs and how data flows. I worked with developers, so I think constantly about how data works.

My interest in Facebook groups started around the time of the Cambridge Analytica security inquiry, where they are

J.S.: What can you find out from Facebook? I know that marketers are able to set up really customized groups. I didn’t know that was related to closed Facebook groups.

A.D.: From reading technical blogs and write-ups, I learned that you can scrape the names of real people in the group from outside the group. You could scrape their locations, their employers, their email addresses and their disease status.

If you are a marketer, it is fairly simple: you want to connect with people who are interested in your product, and you are used to data that is highly tailored to each user. However, if you don’t come from a marketing or technical background, you don’t really know what is going on. If you are just there to connect with other people during a traumatic experience, you are vulnerable, and you may not know to ask the right questions.

As someone who was embedded in those groups, I thought, “Maybe I should ask the same technical questions of Facebook that I would normally ask for myself.”

J.S.: What are those questions that we need to be asking?

A.D.: The questions were how much information I could get and whether it left people vulnerable.

- I looked at the Facebook API. I was able to get a developer account.

- I realized there was a groups API, with developer documentation, that exposed user data from entire groups, including the list of every member, their email address and their home address, even for private groups.

- I looked at the data that someone could hypothetically scrape and whether it could compromise the people it belonged to.

The bigger concern was this: What can we scrape from Facebook as a developer, or through a reverse lookup attack on groups with strict inclusion criteria? Could I look up all the women in a closed group and find their email addresses? I could specify the criteria I wanted and get back personal data. For health groups, this means your personal health data is easy to look up.

The scariest part is that anyone can use Facebook’s API. You just set up a developer account and you have access.

J.S.: So accessing data thought to be private was very possible. Was it easy to access? What constitutes a data attack?

A.D.: People tend not to think about what you can do with that kind of information unless they have worked in that type of role before. I knew just enough that, if I’d had malicious intent, I could have been dangerous with these people’s private data in hand.

If you think about patient support groups, you realize that experts in patient engagement haven’t been talking about the implications of having closed groups on Facebook and what that means for patient privacy. Most users of these groups don’t really know that such groups are not secure and that posting our health identity in a closed group in no way guarantees privacy.

The more I learned about the situation, the scarier it got. I connected with

J.S.: Why did you connect with Fred?

A.D.: I wanted to know if what I found was an actual security threat. I wanted to check my technical work and knew he had credibility in the space. I needed another set of eyes for a security breach report. I had done some risk modeling and wanted him to check it.

J.S.: What do you mean? What does a security breach risk model look like?

A.D.: You have to look at the scope of the vulnerability and what type of data you can look up.

I found out you could write code that searches for a specific disease.

When putting together the report, I did threat modeling, which is part of responsible disclosure. It means asking questions such as:

- If you have an exploit, what is the vulnerability?

- How can the attack be reproduced?

- What is the potential harm if the vulnerability is exploited?
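The three threat-modeling questions above work like a checklist that should be fully answered before a report is submitted. The sketch below is a minimal, hypothetical Python record for that checklist; the class name `ThreatModel`, its fields and the example values are illustrative assumptions, not part of any disclosure standard or of Downing’s actual report.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatModel:
    """Hypothetical record answering the three responsible-disclosure questions."""

    # If you have an exploit, what is the vulnerability?
    vulnerability: str
    # How can the attack be reproduced? (ordered steps)
    reproduction_steps: list[str] = field(default_factory=list)
    # What is the potential harm if the vulnerability is exploited?
    potential_harm: str = ""

    def missing_answers(self) -> list[str]:
        """Return the names of the questions that are still unanswered."""
        missing = []
        if not self.vulnerability.strip():
            missing.append("vulnerability")
        if not self.reproduction_steps:
            missing.append("reproduction_steps")
        if not self.potential_harm.strip():
            missing.append("potential_harm")
        return missing

    def is_ready_to_submit(self) -> bool:
        """A report is complete only when all three questions have answers."""
        return not self.missing_answers()

    def summary(self) -> str:
        """Render the answers as plain text for a disclosure report."""
        steps = "\n".join(
            f"  {i}. {step}" for i, step in enumerate(self.reproduction_steps, start=1)
        )
        return (
            f"Vulnerability: {self.vulnerability}\n"
            f"Reproduction:\n{steps}\n"
            f"Potential harm: {self.potential_harm}"
        )


# Illustrative draft: the harm question has not been answered yet.
draft = ThreatModel(
    vulnerability="Member lists of closed health groups are readable through a developer API",
    reproduction_steps=[
        "Register a developer account",
        "Request member data for a closed group through the API",
    ],
)
print(draft.missing_answers())  # ['potential_harm']
```

Keeping the harm question as its own field reflects Downing’s point that the damage (a “slow bleed” that is hard to trace) has to be argued explicitly rather than assumed.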

In a group like my BRCA Facebook group, the harm is a slow bleed; we can’t always trace it back to the exposure. However, the threat is real: data aggregators, pharma companies or even employers could scrape these lists to identify carriers and then deny us jobs or healthcare.

J.S.: What did Fred tell you to do?

A.D.: Fred told me that we shouldn’t make it public. When you get into consumer data risk modeling, it isn’t something that people on the platforms are aware of. For my community, it is illegal. It can be life-threatening.

J.S.: Why is it so dangerous? I understand not discriminating against people based on a disability or a genetic variation, but when does it become life-threatening?

A.D.: There are vulnerable groups out there where the stakes and the danger are much higher. At first, I didn’t fully understand this. Then I was asked to look at groups such as gay men living in Iran.

If the government knows who you are and can find you on Facebook, it could scrape the group, round up its members and kill them.

J.S.: Have groups been outed in the past? Do we know of instances where this security vulnerability was used to target people?

A.D.: There can be a lot of damage to careers and reputations. There have been groups that have been outed.

We found groups such as some in the Rohingya, where people are facing

J.S.: So you decided not to go to the press. What does someone do when they uncover this type of vulnerability?

A.D.: We wrote a vulnerability report. Matt Might, who has a background in cybersecurity, has a son with a rare disease. We submitted the report, and Facebook’s first reaction was that there was no problem. They said it looked like a new feature request.

They stated up front that their response was not an admission of liability. If they had admitted liability, they would have had to follow the law around health data breaches. A company has a responsibility to follow FTC rules and settlements, such as not making non-public information public. Also, if health data is exposed publicly, the company needs to notify the people who have been affected.

J.S.: Does the breach still exist?

A.D.: Facebook shut down the API on April 4 and changed its privacy settings last July. They closed the most dangerous part, but it still isn’t completely fixed. They did not follow reporting requirements for a security breach.

J.S.: Where do you go from here?

A.D.: For me, the implication is that I want to get my group to a safe space. I want that to be off Facebook. In order for there to be trust, we have to have security.

We want a secure backup of our data, first for our own group and then, zooming out, for other groups. We want to give groups something they can do about it.

Is your security and the potential harm more important than the convenience?

J.S.: What do you want people to know?

A.D.: I want people to know why it is important to get groups off Facebook. I want to share what we know with others. If you are a patient, you will do what you need to do to stay alive. You are forcing people into an unethical situation.

Why the Facebook Health Data Breach Matters

We cannot always convene in public spaces online, and spaces that look private should stay private. Reaching out to technology companies has drawn mixed responses; they are likely well trained in not admitting liability. In the case of the vulnerability that Downing uncovered, patients were not notified that their data had been exposed. Health groups with protected conditions that convene in public spaces have found that their status in a “secret” group is not always secret. While the specific security hole was closed, questions about data privacy and responsibility remain unresolved.
